AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning

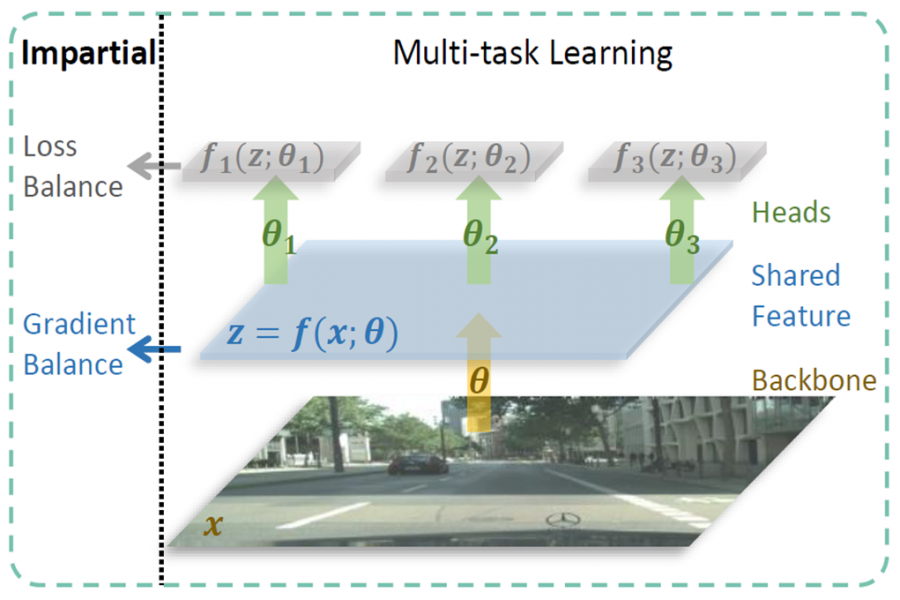

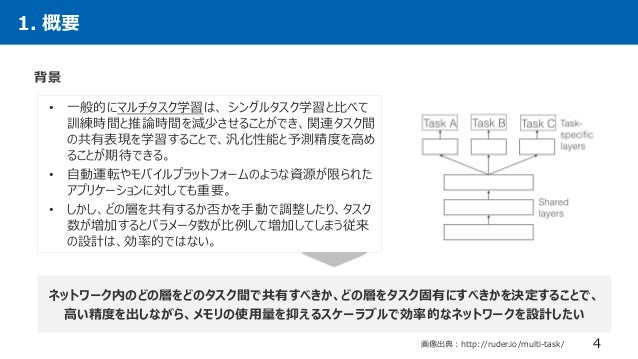

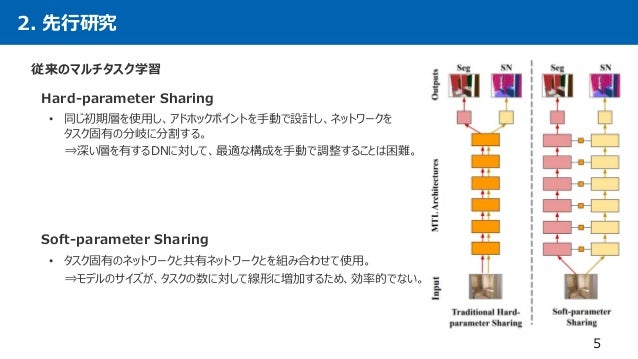

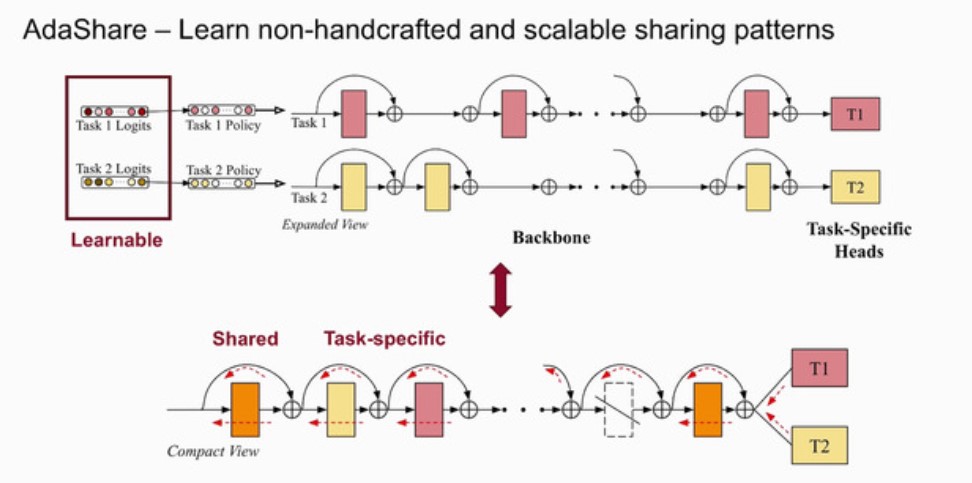

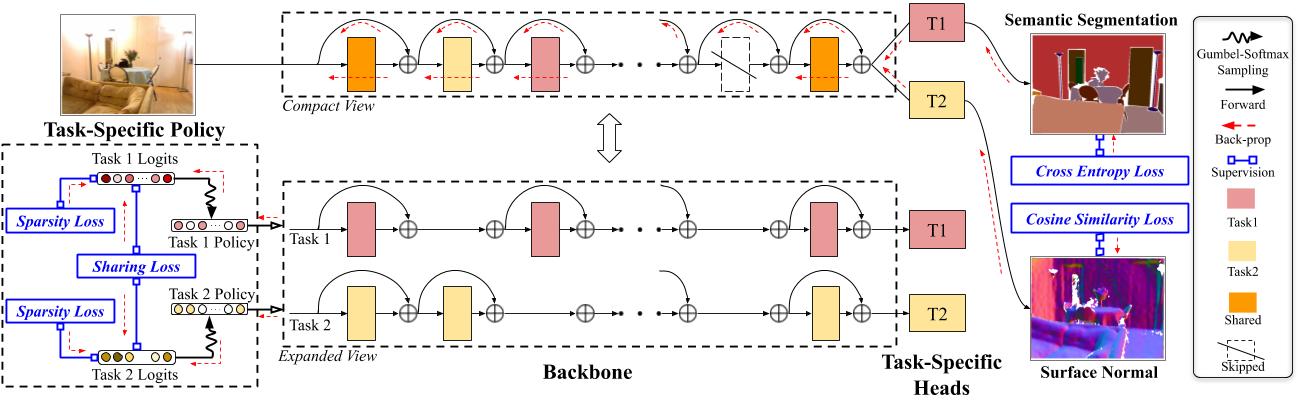

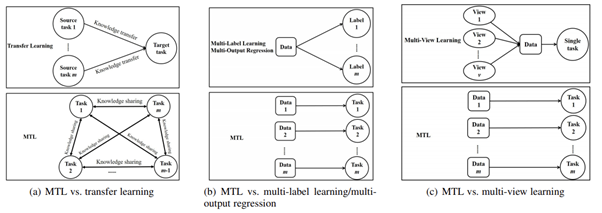

Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what to share across which tasks.

Kdst Adashare Learning What To Share For Efficient Deep Multi Task Learning Nips Paper Review

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Authors: Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko.

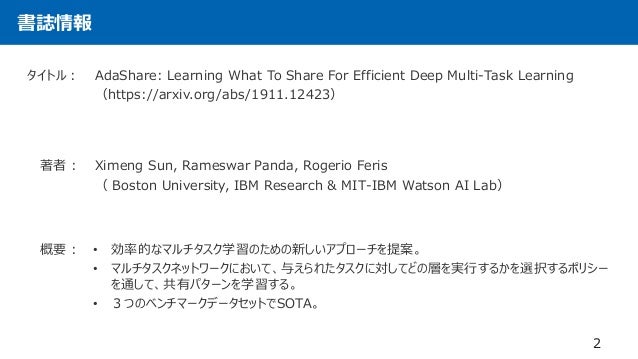

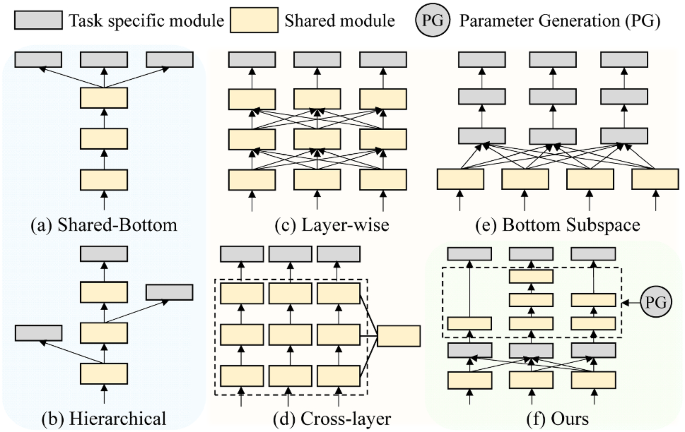

Hard parameter sharing in multi-task learning (MTL) allows tasks to share some of the model parameters, reducing storage cost and improving prediction accuracy. The common practice is to share the bottom layers of a deep neural network among tasks while using separate top layers for each task. In this work, we revisit this common practice via …
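The hard parameter sharing layout described above (shared bottom layers, separate top layers per task) can be illustrated with a minimal sketch. This is not the code of any paper mentioned here; the class name `HardSharedNet`, the layer sizes and the task names are assumptions chosen only for illustration, and the layers are plain bias-free dense layers written with the standard library.

```python
import random


def make_layer(n_out, n_in, rng):
    """Create a dense layer as a list of weight rows (no bias, for brevity)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def linear(weights, x):
    """Multiply a weight matrix (list of rows) by an input vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def relu(x):
    return [max(0.0, v) for v in x]


class HardSharedNet:
    """Hypothetical example: two shared bottom layers, one head per task."""

    def __init__(self, n_in, n_hidden, task_out_dims, seed=0):
        rng = random.Random(seed)
        # Bottom layers: a single copy used by every task.
        self.shared = [
            make_layer(n_hidden, n_in, rng),
            make_layer(n_hidden, n_hidden, rng),
        ]
        # Top layers: one separate head per task.
        self.heads = {
            task: make_layer(dim, n_hidden, rng)
            for task, dim in task_out_dims.items()
        }

    def forward(self, x):
        h = x
        for layer in self.shared:
            h = relu(linear(layer, h))
        # Every head reads the same shared representation.
        return {task: linear(head, h) for task, head in self.heads.items()}

    def num_params(self):
        def count(layer):
            return sum(len(row) for row in layer)

        shared = sum(count(layer) for layer in self.shared)
        heads = sum(count(head) for head in self.heads.values())
        return shared + heads


net = HardSharedNet(n_in=4, n_hidden=8, task_out_dims={"segmentation": 3, "depth": 1})
out = net.forward([0.5, -0.2, 0.1, 0.9])
```

Here the two shared layers are stored once regardless of the number of tasks, which is where the storage saving comes from; only the small heads grow with the task count.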


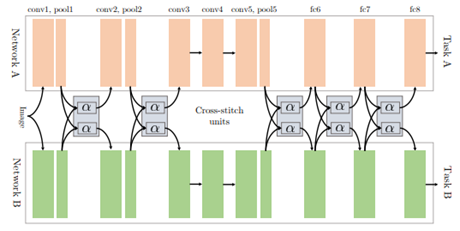

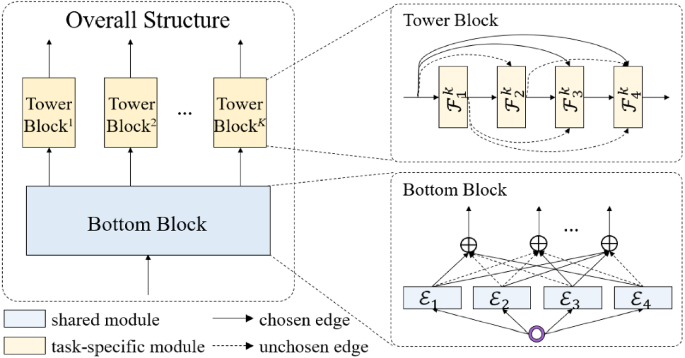

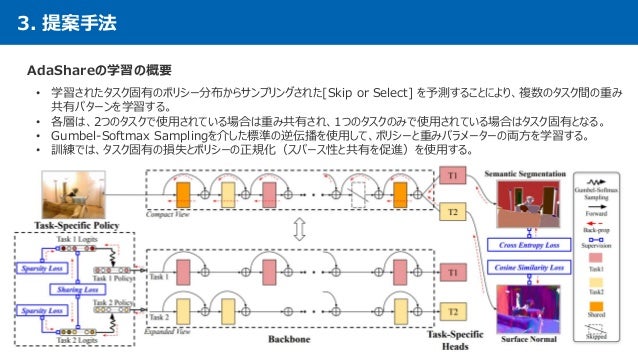

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Introduction. Hard-parameter sharing: the advantage is that it is scalable; the disadvantages are pre-assumed tree structures, negative transfer, and sensitivity to task weights. Soft-parameter sharing: the advantages are less negative interference (though it still exists) and better performance; the disadvantage is that it is not scalable.
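The scalability contrast between hard and soft parameter sharing can be made concrete with a back-of-the-envelope parameter count. The sketch below assumes that hard sharing keeps one backbone plus one head per task, and that soft sharing keeps a full network per task plus optional fusion parameters per task pair; the function names and all sizes are hypothetical.

```python
def hard_sharing_params(backbone, head, num_tasks):
    """One shared backbone, one head per task."""
    return backbone + num_tasks * head


def soft_sharing_params(backbone, head, num_tasks, fusion_per_pair=0):
    """One full network per task, plus optional fusion parameters per task pair."""
    pairs = num_tasks * (num_tasks - 1) // 2
    return num_tasks * (backbone + head) + pairs * fusion_per_pair


# Hypothetical sizes: a 20M-parameter backbone and 1M-parameter heads.
BACKBONE = 20_000_000
HEAD = 1_000_000

for tasks in (2, 3, 5):
    hard = hard_sharing_params(BACKBONE, HEAD, tasks)
    soft = soft_sharing_params(BACKBONE, HEAD, tasks)
    print(f"{tasks} tasks: hard={hard:,} soft={soft:,}")
```

Under these assumptions the hard-sharing count grows only by the head size per extra task, while the soft-sharing count grows by a whole network per task, which is the sense in which the latter is "not scalable".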

Adashare Learning What To Share For Efficient Deep Multi Task Learning Deepai

Papertalk The Platform For Scientific Paper Presentations

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Motivation: Generally in MTL, one of two approaches is used. One is hard parameter sharing, in which initial layers are shared up to a certain point, after which the network branches out to make predictions for individual tasks. The problem with this approach is that it forces the machine learning …

Affiliations: 1 Boston University, 2 MIT-IBM Watson AI Lab, IBM Research. Contact: {sunxm, saenko}@bu.edu, {rpanda@, rsferis@us.}ibm.com

Pdf Adashare Learning What To Share For Efficient Deep Multi Task Learning Semantic Scholar

Code: AdaShare/README.md at master, sunxm2357/AdaShare.

DL Reading Group: Adashare Learning What To Share For Efficient Deep Multi Task

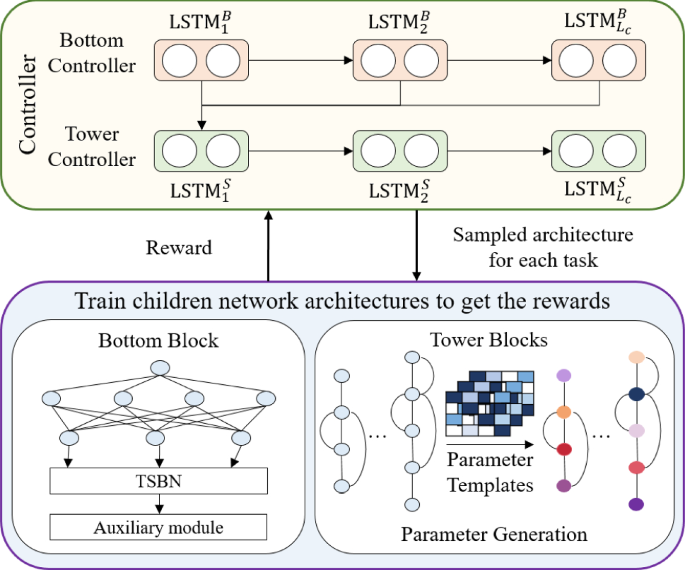

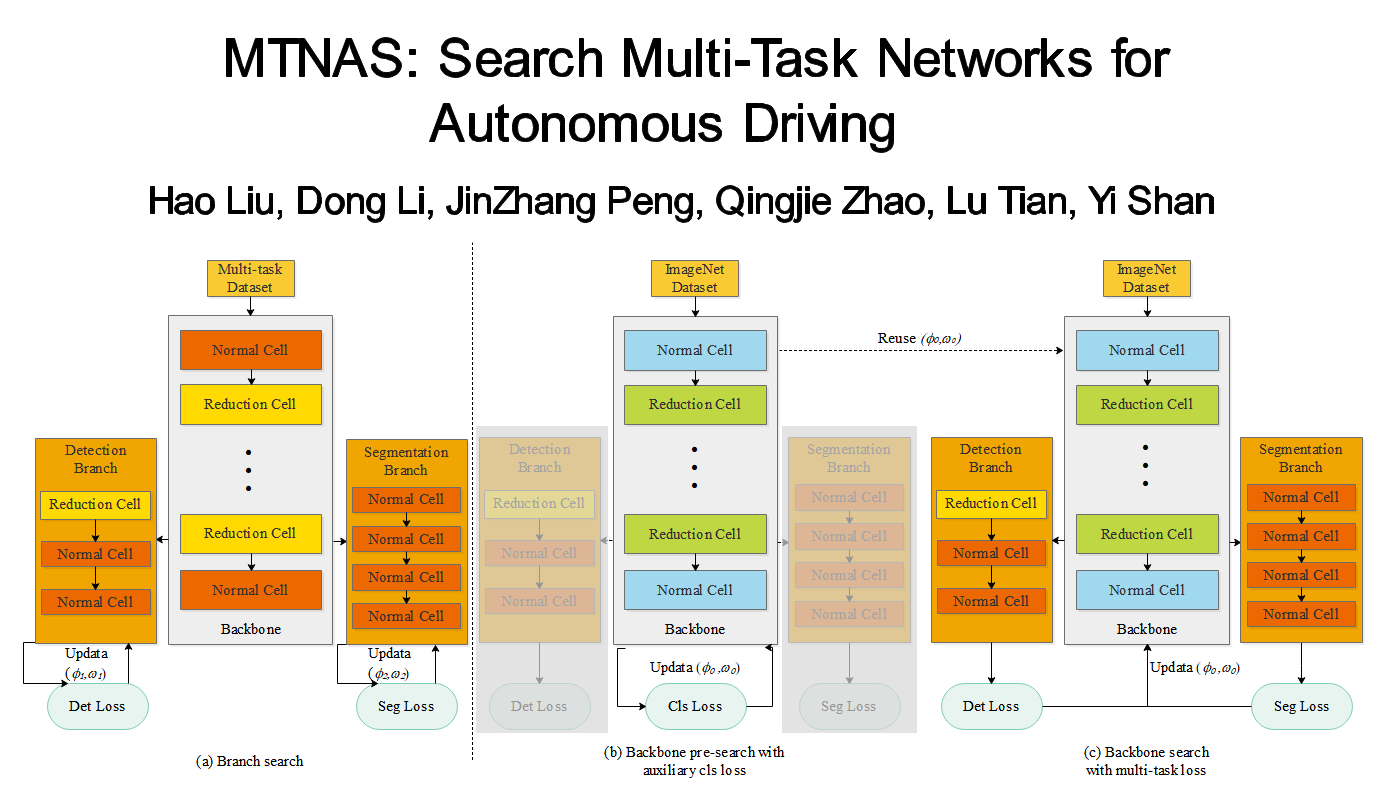

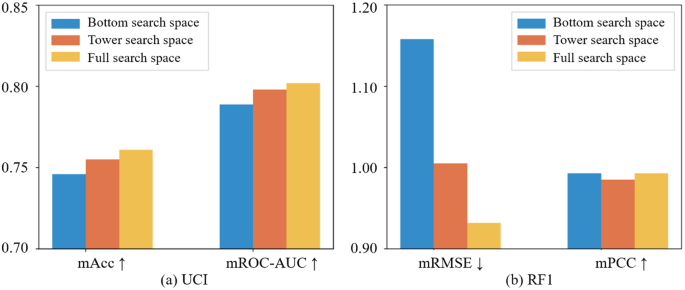

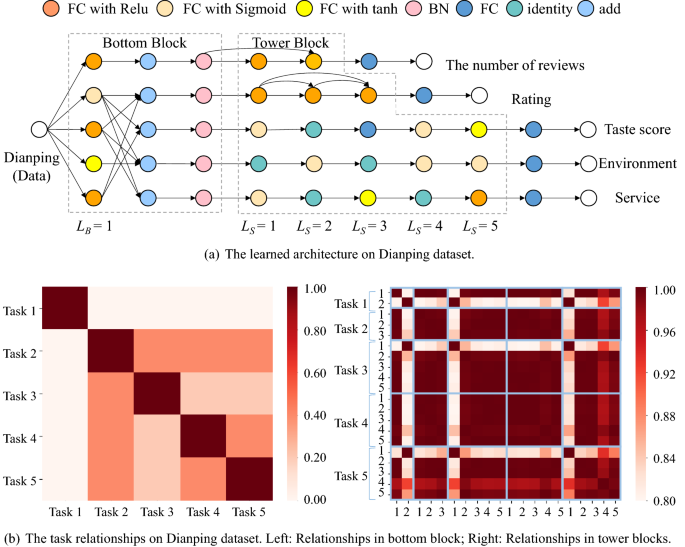

Deep Multi Task Learning With Flexible And Compact Architecture Search Springerlink

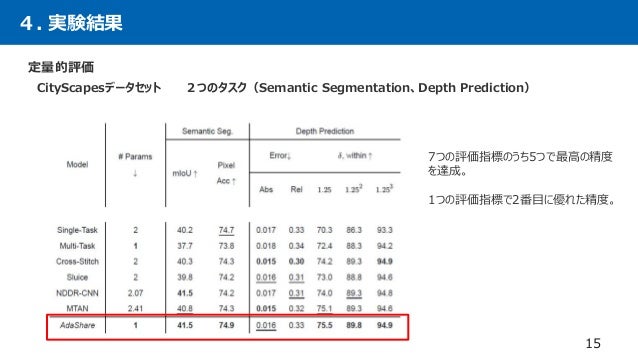

Table 6: Ablation studies on CityScapes 2-task learning. T1: Semantic Segmentation, T2: Depth Prediction. ("AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning")

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning, by Ximeng Sun, Rameswar Panda, Rogerio Feris and Kate Saenko, was presented in Poster Session 4 on GatherTown, Deep learning (Town Spot D0). The arXiv listing shows a revised version (v2).

Learned Weight Sharing For Deep Multi Task Learning By Natural Evolution Strategy And Stochastic Gradient Descent Deepai

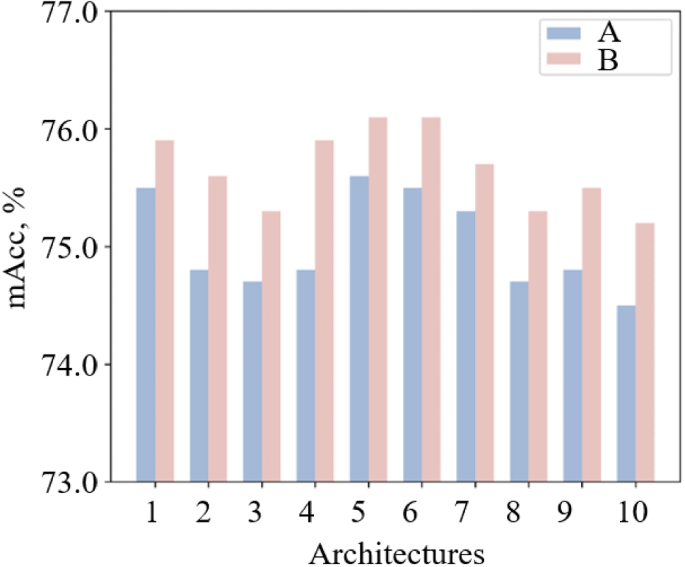

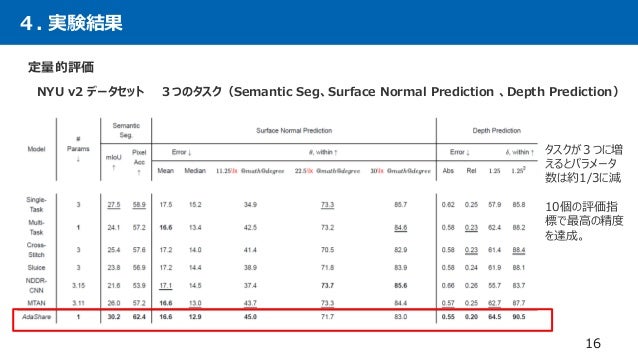

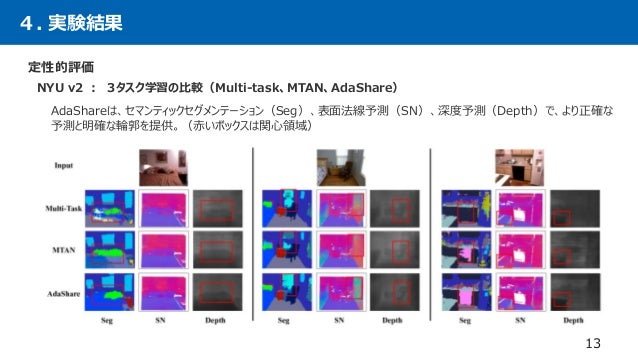

Table 3: NYU v2 3-task learning. Our proposed method AdaShare achieves the best performance (bold) on ten out of twelve metrics across Semantic Segmentation, Surface Normal Prediction and Depth Prediction, using less than 1/3 of the parameters of most of the baselines. ("AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning")

Adashare Learning What To Share For Efficient Deep Multi Task Learning Pythonrepo

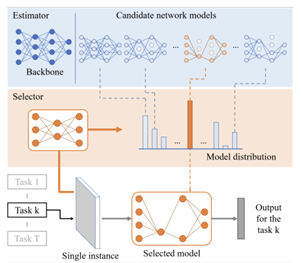

We present a novel and differentiable approach for adaptively determining the feature sharing strategy across multiple tasks in deep multi-task learning. Keywords: task-specific, reinforcement learning, neural network, stochastic gradient descent, feature sharing.
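To give a feel for what "differentiable" can mean for a discrete sharing decision, the sketch below uses the Gumbel-Softmax relaxation, a common way to sample a soft, differentiable approximation of a categorical choice. Treat the technique choice, the two-way select/skip decision, the logit values and the function names as assumptions made for illustration; this is not taken from the authors' code.

```python
import math
import random


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def gumbel_softmax(logits, temperature, rng):
    """Return a soft sample over the options encoded by `logits`.

    Gumbel noise is added to each logit and the result is passed through a
    temperature-scaled softmax; lower temperatures give sharper samples.
    """
    noisy = []
    for logit in logits:
        u = max(rng.random(), 1e-12)  # guard against log(0)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    return softmax(noisy)


rng = random.Random(0)
# Hypothetical logits for one block of one task: [select, skip].
block_logits = [10.0, -10.0]
probs = gumbel_softmax(block_logits, temperature=1.0, rng=rng)
decision = "select" if probs[0] >= probs[1] else "skip"
print(probs, decision)
```

Because the output is a smooth function of the logits, a framework with automatic differentiation could update the logits with the same gradient descent that trains the network weights.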

How To Do Multi Task Learning Intelligently

We report FLOPs of different multi-task learning baselines and their inference time for all tasks of a single image. Table 5 shows that AdaShare reduces FLOPs and inference time in most cases by skipping blocks in some tasks while not adopting any auxiliary networks.

F. Policy Visualizations: We visualize the policy and sharing patterns learned …
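The idea of saving FLOPs by skipping blocks for some tasks can be sketched as follows. The example assumes a per-task binary policy over residual-style blocks, where a skipped block acts as the identity; the toy blocks, the FLOP numbers and the task names are hypothetical and only illustrate the bookkeeping.

```python
def run_task(x, blocks, policy):
    """Apply the selected blocks as residual updates; skipped blocks pass x through."""
    for block, use in zip(blocks, policy):
        if use:
            x = x + block(x)
    return x


def task_flops(block_flops, policy):
    """Sum the cost of the blocks that the policy actually executes."""
    return sum(cost for cost, use in zip(block_flops, policy) if use)


def shared_blocks(policies):
    """Indices of blocks that every task executes."""
    num_blocks = len(next(iter(policies.values())))
    return [i for i in range(num_blocks) if all(p[i] for p in policies.values())]


# Toy stand-ins for network blocks (hypothetical).
blocks = [lambda v: v, lambda v: 1.0, lambda v: 0.5 * v]
block_flops = [100, 200, 300]  # hypothetical per-block cost

# Hypothetical learned policies: 1 = execute the block, 0 = skip it.
policies = {
    "segmentation": [1, 1, 1],
    "depth": [1, 0, 1],
}

for task, policy in policies.items():
    print(task, run_task(1.0, blocks, policy), task_flops(block_flops, policy))
print("shared by all tasks:", shared_blocks(policies))
```

In this toy setup the depth task skips the second block, so it costs 400 instead of 600 units, and blocks 0 and 2 are the ones shared across both tasks.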

This time, I will review AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning, a paper presented at the NIPS poster session.

Auto Virtualnet Cost Adaptive Dynamic Architecture Search For Multi Task Learning Sciencedirect

These properties make multi-task learning an interesting research area that is worth exploring in more depth. Previous work mostly applied multi-task learning to a new set of tasks or investigated on what types of tasks it performs well [7, 33, 17, 18, 27, 1, 21, 25, 2]. The general outcomes of these studies are that multi-task learning does …

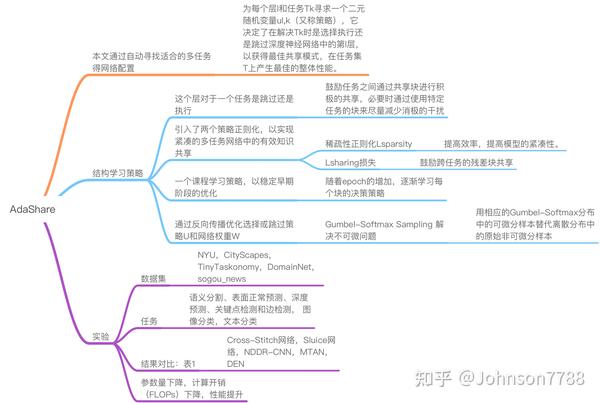

Original paper: AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Authors: Ximeng Sun (1), Rameswar Panda (2). Publication date: November, year not given. Code: GitHub sunxm2357/AdaShare. Outline: 1. Introduction; 2. Related Work; 3. Proposed Method; 4. Experiments; 5. Conclusion.

Home Rogerio Feris

A multi-task learning (MTL) method with adaptively weighted losses applied to a convolutional neural network (CNN) is proposed to estimate the range and depth of an acoustic source in the deep ocean.

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (NeurIPS). AdaShare is a novel and differentiable approach for effici…

Pdf Stochastic Filter Groups For Multi Task Cnns Learning Specialist And Generalist Convolution Kernels

… whole-task approach, object-oriented learning, multiple perspectives and semantically rich objects constitute the framework for a collaborative design process to articulate, build and share knowledge constructed in a community of learners, teachers and experts with the support of social media and mobile …

Multi Task Learning With Deep Neural Networks A Survey Arxiv Vanity

Pdf Dselect K Differentiable Selection In The Mixture Of Experts With Applications To Multi Task Learning

Multimodal Learning Archives Mit Ibm Watson Ai Lab

Learning To Branch For Multi Task Learning Deepai

Ximeng Sun Catalyzex

Kate Saenko Proud Of My Wonderful Students 5 Neurips Papers Come Check Them Out Today Tomorrow At T Co W5dzodqbtx Details Below Buair2 Bostonuresearch

Adashare Learning What To Share For Efficient Deep Multi Task Learning Papers With Code

Adashare Learning What To Share For Efficient Deep Multi Task Learning Request Pdf

Adashare Learning What To Share For Efficient Deep Multi Task Learning Issue 1517 Arxivtimes Arxivtimes Github

Rogerio Feris On Slideslive

Dselect K Differentiable Selection In The Mixture Of Experts With Applications To Multi Task Learning Arxiv Vanity

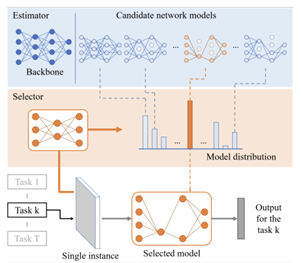

Pdf Deep Elastic Networks With Model Selection For Multi Task Learning Semantic Scholar

Branched Multi Task Networks Deciding What Layers To Share Deepai

Adashare Efficient Deep Multi Task Learning Zhihu

Kate Saenko On Slideslive

Rethinking Hard Parameter Sharing In Multi Task Learning Deepai

Adashare Learning What To Share For Efficient Deep Multi Task Learning Arxiv Vanity
